Robots: Cute or creepy?

Given the many possible fields of application and the strengths of artificial intelligence, you probably wonder why it is not spreading more quickly in business and in our private lives. This is a legitimate question, and it points to the major challenges and limitations that prevent AI from being adopted on a large scale.

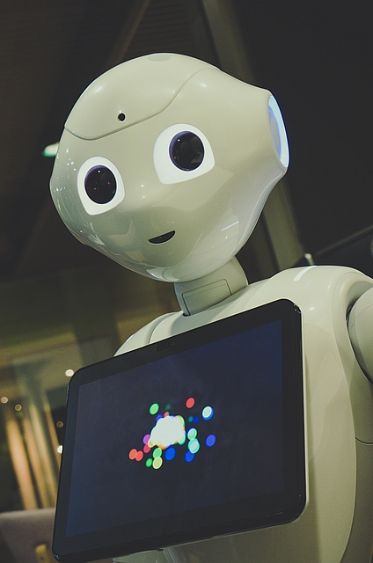

The fact that most people are biased when it comes to AI is a significant problem. Most of us find humanoid robots with big eyes and childlike features, such as Pepper, cute. Other robots mostly frighten us.

This is partly because most of us associate AI with traits seen in science-fiction films, traits that screenwriters and directors gave it for dramatic effect: think of robots and software bent on subjugating or eliminating the entire human race. These Hollywood horror scenarios are extremely unrealistic.

Less intelligent than you might think

Another problem is the term ‘artificial intelligence’ itself, which is not entirely accurate. Robots are not ‘intelligent’ in the sense of ‘being able to think abstractly and rationally and to derive purposeful actions from that thinking’, and it is questionable whether they ever will be.

As we have seen in the third article of our series, AI has no will of its own but simply performs the tasks it is given. Hence, AI is only as intelligent as its code, and that is about it.

Basically, you can compare AI to a kitchen knife. It is a tool that can render important services but has no will or intelligence of its own. We humans decide whether the tool we have created turns into something dangerous, or whether we use it as a weapon against other people.

Preventing misuse

AI developers are well aware of its potential dangers, and preventing the misuse of AI is an important field of research. Scientists and developers in Germany and other countries are working towards giving robots a code of conduct. To implement such a code, modern robots need sensors, cameras and defined limit values, such as the maximum permitted deviation from the desired state. Without the appropriate sensor technology this would not be possible, since the robots would not realise that something is wrong.
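The idea of limit values can be illustrated with a minimal Python sketch. It assumes each sensor has a desired value and a maximum permitted deviation; the sensor names, the numbers and the functions `violations` and `should_stop` are hypothetical and not taken from any real robot controller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    """Desired value and maximum tolerated deviation for one sensor (hypothetical)."""
    target: float
    max_deviation: float


# Illustrative limit values only; real robots define these per application.
LIMITS = {
    "gripper_force_n": Limit(target=20.0, max_deviation=5.0),
    "arm_speed_m_s": Limit(target=0.5, max_deviation=0.2),
}


def violations(readings: dict[str, float], limits: dict[str, Limit]) -> list[str]:
    """Return the sensors whose reading is missing or outside its limit."""
    flagged = []
    for name, limit in limits.items():
        value = readings.get(name)
        # A missing reading counts as a violation: without sensor data
        # the robot cannot know that something is wrong.
        if value is None or abs(value - limit.target) > limit.max_deviation:
            flagged.append(name)
    return flagged


def should_stop(readings: dict[str, float]) -> bool:
    """Stop as soon as any limit value is exceeded."""
    return bool(violations(readings, LIMITS))
```

Treating a missing reading as a violation reflects the point made above: a robot that cannot sense its situation has no way of noticing a problem, so the cautious choice in this sketch is to stop.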

Besides, there is nothing new about being scared of robots. About 70 years ago, Isaac Asimov devised the Three Laws of Robotics in a short story; he later placed a Zeroth Law ahead of them.

Zeroth Law: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

First Law: A robot may not injure a human being, or, through inaction, allow a human being to come to harm, unless this would violate the Zeroth Law of Robotics.

Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the Zeroth or First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the Zeroth, First or Second Law.
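The laws form a strict priority order: each law only applies where it does not conflict with the ones before it. A toy Python sketch of that ordering follows. It assumes the robot could already predict, for every possible action, which laws that action would break, which is the genuinely hard part and is not modelled here. The names `Outcome` and `choose_action` are hypothetical.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    """Predicted violations of one action, ordered from highest to lowest priority."""
    harms_humanity: bool   # Zeroth Law
    harms_human: bool      # First Law
    disobeys_order: bool   # Second Law
    endangers_robot: bool  # Third Law


def choose_action(candidates: dict[str, Outcome]) -> str:
    """Pick the action whose violations are least severe.

    Tuples compare field by field and False sorts before True, so breaking
    a higher-priority law always outweighs breaking any lower-priority ones.
    """
    return min(candidates, key=lambda name: candidates[name])


# A human orders the robot to fetch something from a dangerous room.
fetch_order = {
    "obey": Outcome(False, False, False, True),
    "refuse": Outcome(False, False, True, False),
}

# A human orders the robot to push another person.
push_order = {
    "obey": Outcome(False, True, False, False),
    "refuse": Outcome(False, False, True, False),
}
```

In this sketch `choose_action(fetch_order)` selects `"obey"`, because obeying orders outranks self-protection, while `choose_action(push_order)` selects `"refuse"`, because not injuring a human outranks obeying orders.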

Even though these laws, or rather meta rules, remain a solid foundation for working with autonomous robots, additional laws and regulations are required.

The first laws for robotics were adopted in the field of autonomous driving. A number of directives have also been issued concerning the use of robots across the EU. However, these directives do not govern the whole range of possible robot implementations. It will be important to elaborate and further develop these regulations in the future in order to ensure maximum safety and security. The biggest challenge in this regard is that universal rules for robotics are still lacking.

A promising approach to achieving standardised robot controls in the long term is the TransAIR project launched by Professor Michael Beetz. Professor Beetz and his team have created the openEASE online platform, where programmers can share code for robots in order to increase transparency and build a universal database, thus helping to prevent misuse and similar threats.

Lacking human intelligence

The second major weakness of intelligent machines is that they lack human intelligence and behaviour. Most people realise immediately that they are talking to or interacting with a machine. This is either because the machine’s voice does not sound natural or because its reactions and answers are sometimes illogical or out of context. However, major IT companies agree that this problem will be solved sooner or later.

We already know how to tell when this problem can be considered officially solved: once the so-called Turing test has been passed. The test does not set a specific date for the solution but establishes the general conditions that the technology has to fulfil.

The Turing test: Human or machine?

The Turing test is a test of a machine’s ability to exhibit intelligent, human-like behaviour. It involves a human interrogator communicating with both a human and a machine without being able to see or hear them.

If the interrogator cannot reliably tell the machine from the human, the machine is said to possess independent intelligence. It is uncertain whether this test addresses all the problems arising from the use of AI and its lack of human intelligence. Should a machine pass the test, however, it would be a clear indicator of significant scientific progress towards establishing AI and robotics in our everyday life.
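What ‘reliably’ means is not fixed by the test itself. One possible reading, sketched below in Python, is to ask whether the interrogator’s hit rate over a series of rounds is clearly better than coin-flip guessing. The 5% threshold and the function names `p_value_at_least` and `machine_passes` are assumptions made for illustration, not part of the Turing test as such.

```python
from math import comb


def p_value_at_least(correct: int, trials: int) -> float:
    """Probability of `correct` or more right answers in `trials` rounds
    if the interrogator were purely guessing (50% chance per round)."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


def machine_passes(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """The machine passes if the interrogator's result could plausibly be luck.

    `alpha` is an assumed significance threshold, not a value from the test.
    """
    return p_value_at_least(correct, trials) >= alpha
```

Under these assumptions, an interrogator who identifies the machine correctly in 10 out of 10 rounds is clearly not guessing, so `machine_passes(10, 10)` is false; with 5 correct out of 10, the result is indistinguishable from chance and the machine would pass.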
